Preface and Acknowledgments

Peter W. Wood

President,

National Association of Scholars

An irreproducibility crisis afflicts a wide range of scientific and social-scientific disciplines, from epidemiology to social psychology. Improper research techniques, a lack of accountability, disciplinary and political groupthink, and a scientific culture biased toward producing positive results contribute to this plight. Other factors include inadequate or compromised peer review, secrecy, conflicts of interest, ideological commitments, and outright dishonesty.

Science has always had a layer of untrustworthy results published in respectable places and “experts” who are eventually shown to have been sloppy, mistaken, or untruthful in their reported findings. Irreproducibility itself is nothing new. Science advances, in part, by learning how to discard false hypotheses, which sometimes means dismissing reported data that does not stand the test of independent reproduction.

But the irreproducibility crisis is something new. The magnitude of false (or simply irreproducible) results reported as authoritative in journals of record appears to have dramatically increased. “Appears” is a word of caution, since we do not know with any precision how much unreliable reporting occurred in the sciences in previous eras. Today, given the vast scale of modern science, even if the percentage of unreliable reports has remained fairly constant over the decades, the sheer number of irreproducible studies has grown vastly. Moreover, the contemporary practice of science, which depends on a regular flow of large state expenditures, means that the public is, in effect, buying a product rife with defects. On top of this, the regulatory state frequently builds both its cases for regulation and the substance of its regulations on the basis of unproven, unreliable, and sometimes false scientific claims.

In short, many supposedly scientific results cannot be reproduced reliably in subsequent investigations and offer no trustworthy insight into the way the world works. A majority of modern research findings in many disciplines may well be wrong.

That was how the National Association of Scholars summarized matters in our report The Irreproducibility Crisis of Modern Science: Causes, Consequences, and the Road to Reform (2018).1 Since then we have continued our work to press for reproducibility reform by several different avenues. In February 2020, we co-sponsored with the Independent Institute an interdisciplinary conference on Fixing Science: Practical Solutions for the Irreproducibility Crisis, to publicize the irreproducibility crisis, exchange information across disciplinary lines, and canvass (as the title of the conference suggests) practical solutions for the irreproducibility crisis.2 We have also provided a series of public comments in support of the Environmental Protection Agency’s rule Strengthening Transparency in Pivotal Science Underlying Significant Regulatory Actions and Influential Scientific Information.3 We have publicized different aspects of the irreproducibility crisis by way of podcasts and short articles.4

And we have begun work on our Shifting Sands project. This report is the first of four that will appear as part of Shifting Sands, each of which will address the role of the irreproducibility crisis in different areas of federal regulatory policy. Here we address a central question that arose after we published The Irreproducibility Crisis.

You’ve shown that a great deal of science hasn’t been reproduced properly and may well be irreproducible. How much government regulation is actually built on irreproducible science? What has been the actual effect on government policy of irreproducible science? How much money has been wasted to comply with regulations that were founded on science that turned out to be junk?

This is the $64 trillion dollar question. It is not easy to answer. Because the irreproducibility crisis has so many components, each of which could affect the research that is used to inform regulatory policy, we are faced with a maze of possible sources of misdirection.

The authors of Shifting Sands include these just to begin with:

- malleable research plans;

- legally inaccessible data sets;

- opaque methodology and algorithms;

- undocumented data cleansing;

- inadequate or non-existent data archiving;

- flawed statistical methods, including p-hacking;

- publication bias that hides negative results; and

- political or disciplinary groupthink.

Each of these could have far-reaching effects on government regulatory policy—and for each of these, the critique, if well-argued, would most likely prove that a given piece of research had not been reproduced properly—not that it actually had failed to reproduce. (Studies can be made to “reproduce,” even if they don’t really.) To answer the question thoroughly, one would need to reproduce, multiple times, to modern reproducibility standards, every piece of research that informs governmental regulatory policy.

This should be done. But it is not within our means to do so.

What the authors of Shifting Sands did instead was to reframe the question more narrowly. Governmental regulation is meant to clear a high barrier of proof. Regulations should be based on a very large a body of scientific research, the combined evidence of which provides sufficient certainty to justify reducing Americans’ liberty with a government regulation. What is at issue is not any particular piece of scientific research, but rather whether the entire body of research provides so great a degree of certainty as to justify regulation. If the government issues a regulation based on a body of research that has been affected by the irreproducibility crisis so as to create the false impression of collective certainty (or extremely high probability), then, yes, the irreproducibility crisis has affected government policy by providing a spurious level of certainty to a body of research that justifies a government regulation.

The justifiers of regulations based on flimsy or inadequate research often cite a version of what is known as the “precautionary principle.” This means that, rather than basing a regulation on science that has withstood rigorous tests of reproducibility, they base the regulation on the possibility that a scientific claim is accurate. They do this with the logic that it is too dangerous to wait for the actual validation of a hypothesis, and that a lower standard of reliability is necessary when dealing with matters that might involve severely adverse outcomes if no action is taken.

This report does not deal with the precautionary principle, since it summons a conclusiveness that lies beyond the realm of actual science. We note, however, that invocation of the precautionary principle is not only non-scientific, but is also an inducement to accepting meretricious scientific practice and even fraud.

The authors of Shifting Sands addressed the more narrowly framed question posed above. They applied a straightforward statistical test, Multiple Testing and Multiple Modeling (MTMM), and applied it to a body of meta-analyses used to justify government research. MTMM provides a simple way to assess whether any body of research has been affected by publication bias, p-hacking, and/or HARKing (Hypothesizing After the Results were Known)—central components of the irreproducibility crisis. In this first report, the authors applied this MTMM method to portions of the research underlying the Environmental Protection Agency’s (EPA) PM2.5 regulations—the regulations based upon research affirming that particulate matter smaller than 2.5 microns in diameter has a deleterious effect on human health. The authors found that there was indeed strong evidence that these meta-analyses had been affected by publication bias, p-hacking, and/or HARKing. Their result provides strong evidence that elements of the irreproducibility crisis have led the Environmental Protection Agency to impose burdensome regulations with substantial economic impact based on insufficient scientific support.

That’s the headline conclusion. But it leads to further questions. Why didn’t the EPA use this statistical technique long ago? How exactly does regulatory policy assess scientific research? What precise policy reforms does this research conclusion therefore suggest?

The broadest answer to why the EPA hasn’t adopted this statistical technique for PM2.5 regulations is that the entire discipline of environmental epidemiology depends upon a series of assumptions and procedures, many of which give pause to professionals in different fields—and which should give pause to the layman as well.

- At the most fundamental statistical level, environmental epidemiology has not taken into account the recent challenges posed to the very concept of statistical significance, or the procedures of probability of causation.5 The Shifting Sands authors confined their critique to much narrower ground, but readers should be aware that the statistical foundations underlying environmental epidemiology are by no means secure.

- Environmental epidemiology generally relies on statistical associations between air components and health outcomes, not on direct causal biological mechanisms. Statistical methods matter so much in the debate about regulatory policy because, usually, the only support for regulation lies in such statistical associations.

- Environmental epidemiology relies on unique data sets that are not publicly available. The nature of the discipline provides rationales for this procedure. Environmental epidemiology requires massive amounts of data collected over decades. It is difficult to collect this data even once—much of the data belongs to private organizations, and the data may pose a threat to the privacy of the individuals from whom they were collected. Nevertheless, it is not in any strict sense science to rely on data which are not freely available for inspection.

- Most relevantly for Shifting Sands, environmental epidemiology as a discipline has rejected the need to adjust results for multiple comparisons. In 1990, the lead editorial of Epidemiology bore the title, “No Adjustments Are Needed for Multiple Comparisons.”6 The entire discipline of environmental epidemiology uses procedures that are guaranteed to produce false positives and rejects using well-established corrective procedures. MTMM tests have been available for decades. Genetic epidemiologists adopted them long ago. Environmental epidemiology rejects MTMM tests as a discipline—and because it does, the EPA can say it is simply following professional judgment.

These are serious flaws—and I don’t mean by highlighting them to suggest that environmental epidemiologists haven’t done serious and successful work to keep themselves on the statistical straight-and-narrow. A very large portion of environmental epidemiology consists of sophisticated and successful attempts to ensure that practitioners avoid the biased selection of data, and the discipline also has adopted several procedures to account for aspects of the irreproducibility crisis.7 The discipline does a great deal correctly, for which it should be commended. But the discipline isn’t perfect. It possesses blind-spots that amount to disciplinary groupthink. Americans must not simply defer to environmental epidemiology’s “professional consensus.”

Yet that is what the EPA does—and, indeed, the federal government as a whole. The intention here was sensible—that government should seek to base its views on disinterested experts as the best way to provide authoritative information on which it should act. Yet there are several deep-rooted flaws in this system, which have become increasingly apparent in the decades since the government has developed an extensive scientific-regulatory complex.

- Government regulations do not account for disciplinary group-think.

- Government regulations do not account for the possibility that a group of scientists and governmental regulators, working unconsciously or consciously, might act to skew the consideration of which science should be used to inform regulation.

- Government regulations define “best available science” by the “weight of evidence” standard. This is an arbitrary standard, subject to conscious or unconscious manipulation by government regulators. It facilitates the effects of groupthink and the skewed consideration of evidence.

- Governmental regulations have failed to address fully the challenge of the irreproducibility crisis, which requires a much higher standard of transparency and rigor than was previously considered “best acceptable science.”

- The entire framework of seeking out disinterested expertise failed to take into account the inevitable effects of using scientific research to justify regulations that affect policy, have real-world effect, and become the subject of political debate and action. The political consequences have unavoidably had the effect of tempting political activists to skew both scientific research and the governmental means of weighing scientific research. Put another way, any formal system of assessment inevitably invites attempts to game it.

- To all this we may add the distorting effects of massive government funding of scientific research. The United States federal government is the largest single funder of scientific research in the world; its expectations affect not only the research it directly funds but also all research done in hopes of receiving federal funding. Government experts therefore have it in their power to create a skewed body of research, which they can then use to justify regulation.

Shifting Sands casts a critical eye on the procedures of the field of environmental epidemiology, but it also casts a critical eye on governmental regulatory procedure, which has provided no check to the flaws of the environmental epidemiology discipline, and which is susceptible to great abuse. Shifting Sands is doing work that environmental epidemiologists and governmental regulators should have done decades ago. Their failure to do so is itself substantial evidence of the need for widespread reform, both among environmental epidemiologists and among governmental regulators.

Before I go further, I should make clear the stakes of the “skew” in science that feeds regulation.

A vast amount of government regulation is based on scientific research affected by the irreproducibility crisis. This research includes such salient topics as racial disparity, implicit bias, climate change, and pollution regulation—and every aspect of science and social science that uses statistics. Climate change is the most fiercely debated subject, but the EPA’s pollution regulations are a close second—not least because American businesses must pay extraordinary amounts of money to comply with them. A 2020 report prepared for the Natural Resource Defense Council estimates that American air pollution regulations cost $120 billion per year—and we may take the estimate provided to an environmental advocacy group to be the lowest plausible number.8 The economic consequences carry with them correspondingly weighty political corollaries: the EPA’s pollution regulations constitute a large proportion of the total power available to the federal government. The economic and political consequences of the EPA’s regulations are why we devoted our first Shifting Sands report to PM2.5 regulation.

PM2.5 regulation is not even the largest single issue the irreproducibility crisis has raised with EPA pollution regulations. The largest single issue is the Harvard Six Cities and American Cancer Society (ACS) studies, which provide the basis for much of the EPA’s pollution regulation. All this data is confidential and not publicly available for full reproduction. Any rigorous introduction of transparency requirements, applied retroactively, has the potential to disable much of the last generation of EPA pollution regulations. Any reproducibility reform has the potential to act as a precedent for extraordinarily consequential rollback of existing pollution regulations.

These political consequences lie behind public arguments about “skew.” Critics of the EPA point to the influence of environmental activists on overlapping groups of scientists and regulators, who collectively skew the results of science toward answers that would justify regulation. Defenders of the EPA, by contrast, see industry-employed scientists who skew science to undercut the case for regulation.9 The authors of Shifting Sands are among the former camp—as am I. I find it difficult to comprehend how the gap between environmental science and environmental regulatory policy could have emerged absent such skew.

Such arguments do not necessarily deny the good faith of those accused of skewing science. Humans are capable of good faith and bad faith at the same time: struggling for truth here, taking shortcuts there, and sometimes just knowingly advancing a falsehood on the presumption that the ends justify the means. The intrusion of bad faith is not the vice of only one party. Knowing that people are tempted, we need checks and balances, transparency, and something like an audit trail. Both conscious and unconscious bias play a part, as does sloppiness or deliberate use of bad scientific procedures to obtain preferred policy goals. The NAS would prefer to believe that the mistakes of the EPA derive from sloppiness and unconscious bias, and that a good-faith critique of its practices will be met with an equally good-faith response.

Shifting Sands strengthens the case for policy reforms that would reduce the EPA’s current remit. The authors and I believe that this is the logical corollary of the current state of statistically informed science. I trust that we would favor the rigorous use of MTMM tests no matter what policy result they indicated, and I will endeavor to make good on that principle if MTMM tests emerge that argue against my preferred policies. Those are the policy stakes of Shifting Sands. I hope that its scientific claims will be judged without reference to its likely policy consequences. The possible policy consequences have not pre-determined the report’s findings. We claim those findings are true, regardless of the consequences, and we invite others to reproduce our work.

This report puts into layman’s language the results of several technical studies by members of the Shifting Studies team of researchers, S. Stanley Young and Warren Kindzierski. Some of these studies have been accepted by peer-reviewed journals; others are under submission. As part of NAS’s own institutional commitment to reproducibility, Young and Kindzierski pre-registered the methods of their technical studies. And, of course, NAS’s support for these researchers explicitly guaranteed their scholarly autonomy and the expectation that these scholars would publish freely, according to the demands of data, scientific rigor, and conscience.

This report is only the first of four scheduled reports, each critiquing different aspects of the scientific foundations of federal regulatory policy. We intend to publish these reports separately and as one long report, which will eliminate some necessary duplication in the material of each individual report. The NAS intends these four reports collectively to provide a substantive, wide-ranging answer to the question What has been the actual effect on government policy of irreproducible science?

I am deeply grateful for the support of many individuals who made Shifting Sands possible. The Arthur N. Rupe Foundation provided Shifting Sands’ funding—and, within the Rupe Foundation, Mark Henrie’s support and goodwill got this project off the ground and kept it flying. Four readers invested considerable time and thought to improve this report with their comments: Anonymous, William M. Briggs, David C. Bryant, and Louis Anthony Cox, Jr. David Randall, NAS’s Director of Research, provided staff coordination of Shifting Sands—and, of course, Stanley Young has served as Director of the Shifting Sands Project. Reports such as these rely on a multitude of individual, extraordinary talents.

Introduction

Something Has Gone Wrong with Science

An irreproducibility crisis afflicts a wide range of scientific and social-scientific disciplines, from public health to social psychology. Far too frequently, scientists cannot replicate claims made in published research.1 Many improper scientific practices contribute to this crisis, including poor applied statistical methodology, bias in data reporting, fitting the hypotheses to the data, and endemic groupthink.2 Far too many scientists use improper scientific practices, including outright fraud.3

The irreproducibility crisis affects entire scientific disciplines. In 2011, researchers at the National Institute of Statistical Sciences reported that not one of fifty-two claims in a body of observational studies could be replicated in randomized clinical trials.4 In 2012, the biotechnology firm Amgen tried to reproduce 53 “landmark” scientific studies in hematology and oncology; it could only replicate six.5 A 2015 Open Science Collaboration study that analyzed 100 experimental claims published in prominent psychological journals found that only 36% of the replication research produced statistically significant results, versus 97% of the original studies.6

This poses serious questions for policymakers. How many federal regulations reflect irreproducible, flawed, and unsound research? How many grant dollars have funded irreproducible research? In short, how many government regulations based on irreproducible claims harm the common good?

Professional groupthink among nutritionists led the Food and Drug Administration (FDA) to recommend that Americans cut their intake of fat, instead of sugar, to prevent obesity. The FDA’s guidelines were ineffective or outright harmful: the American obesity rate skyrocketed from 13.4% to 35.1% between 1960-62 and 2005-06, and further increased to 45.8% by 2013-16.7

The Federal government spends millions of dollars to train its officials to avoid “implicit bias.”8 The Department of Education cited the same “implicit bias” to justify a Dear Colleague letter strong-arming local school districts to loosen their school discipline policies.9 Yet when researchers tried to replicate the sociology research claiming to prove the existence of “implicit bias,” they couldn’t.10

The Nuclear Regulatory Commission adopted the Linear-No-Threshold (LNT) dose-response model to justify extensive safety regulations to prevent cancer risks. Yet increasing numbers of experimenters have failed to reproduce the research that justified the LNT dose-response model,11 which has been used to support crippling regulations.12

These examples are only the tip of the iceberg. Even that tip suggests that the irreproducibility crisis in science may have inflicted massive damage on federal regulatory policy.

Americans need to know just how bad that damage is, and which reforms can best improve how government regulation assesses scientific research.

The Shifting Sands Project

The National Association of Scholars’ (NAS) project—Shifting Sands: Unsound Science and Unsafe Regulationexamines how irreproducible science negatively affects select areas of government policy and regulation governed by different federal agencies. We also aim to demonstrate procedures by which to detect irreproducible research. We believe government agencies should incorporate these procedures as they determine what constitutes “best available science”—the bureaucratically defined standard that judges which research should inform government regulation.13

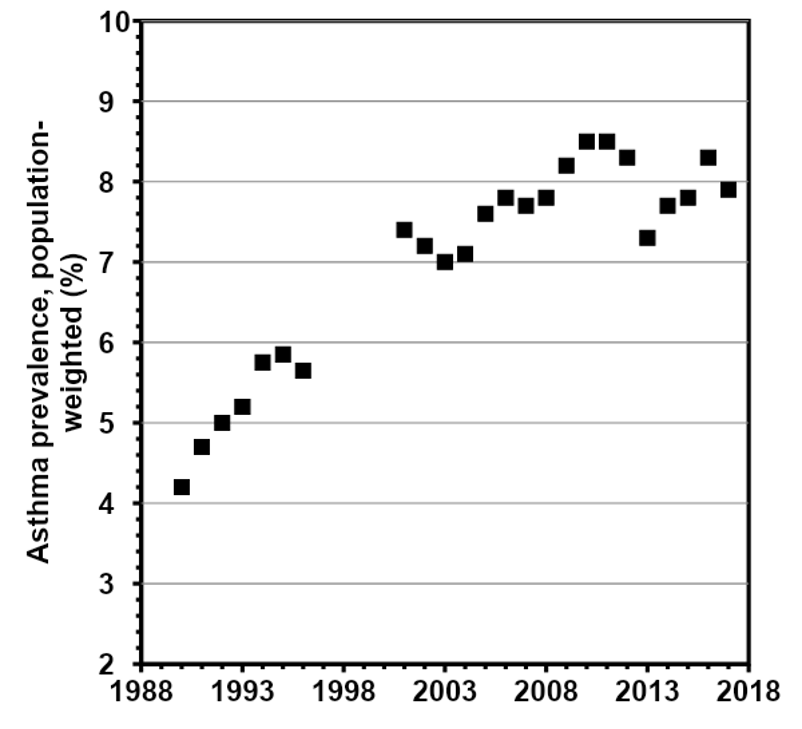

This first policy paper on PM2.5 Regulation focuses on irreproducible research in the field of environmental epidemiology that informs the Environmental Protection Agency’s (EPA) policies and regulations. PM2.5 Regulationspecifically focuses upon the scientific research that associates airborne fine particulate matter smaller than 2.5 microns in diameter (PM2.5) with health effects such as asthma and heart attacks. This research undergirds existing, economically burdensome EPA air pollution regulations. Future reports will examine irreproducible research that informs coronavirus policy at the Centers for Disease Control and Prevention (CDC), nutrition policy at the Food and Drug’s Administration’s (FDA) Center for Food Safety and Applied Nutrition, and implicit bias policy at the Department of Education.

Shifting Sands aims to demonstrate that the irreproducibility crisis has affected so broad a range of government regulation and policy that government agencies should engage in thoroughgoing modernization of the procedures by which they judge “best available science.” Agency regulations should address all aspects of irreproducible research, including the inability to reproduce:

- the research processes of investigations;

- the results of investigations; and

- the interpretation of results.14

In Shifting Sands we will use a single analysis strategy for all of our policy papers: p-value plotting (a form of Multiple Testing and Multiple Modeling) as a way to demonstrate weaknesses in different agencies’ use of meta-analyses. Our common approach supports a comparative analysis across different subject areas, while allowing for a focused examination of one dimension of the impact of the irreproducibility crisis on government agencies’ policies and regulations.

Future investigations into the effects of the irreproducibility crisis on regulatory policy might explore (for example) the consequences of:

- malleable research plans;

- legally inaccessible data sets;15

- opaque methodology and algorithms;

- undocumented data cleansing;

- inadequate or non-existent data archiving;

- flawed statistical methods, including p-hacking (described below);

- publication bias that hides negative results; and

- political or disciplinary groupthink.16

Each of these effects can degrade the reliability of scientific research; jointly, they have greatly reduced public confidence in the reliability of scientific research that underpins federal regulatory policy.

PM2.5 Regulation focuses on one subject matter—PM2.5 regulation—and one methodology—p-value plotting—to critique meta-analyses. The paper contains five sections:

- an introduction to the nature of the irreproducibility crisis;

- an explanation of p-value plotting;

- a history of the EPA’s PM2.5 regulation;

- the results of our examination of environmental epidemiology meta-analyses; and

- our recommendations for policy changes.

Our policy recommendations include both specific technical recommendations directly following from our technical analysis, and broader policy recommendations to address the larger effects of the irreproducibility crisis.

The Irreproducibility Crisis of Modern Science

The Catastrophic Failure of Scientific Replication

Before plunging into the gory details, let us briefly review the methods and procedures of science. The empirical scientist conducts controlled experiments and keeps accurate, unbiased records of all observable conditions at the time the experiment is conducted. If a researcher has discovered a genuinely new or previously unobserved natural phenomenon, other researchers—with access to his notes and some apparatus of their own devising—will be able to reproduce or confirm the discovery. If sufficient corroboration is forthcoming, the scientific community eventually acknowledges that the phenomenon is real and adapts existing theory to accommodate the new observations.

The validation of scientific truth requires replication or reproduction. Replicability (most applicable to the laboratory sciences) most commonly refers to obtaining an experiment’s results in an independent study, by a different investigator with different data, while reproducibility (most applicable to the observational sciences) refers to different investigators using the same data, methods, and/or computer code to reach the same conclusion.17 We may further subdivide reproducibility into methods reproducibility, results reproducibility, and inferential reproducibility.18 Scientific knowledge only accrues as multiple independent investigators replicate and reproduce one another’s work.19

Yet today the scientific process of replication and reproduction has ceased to function properly. A vast proportion of the scientific claims in published literature have not been replicated or reproduced; credible estimates are that a majority of these claims cannot be replicated or reproduced—that they are in fact false.20 An extraordinary number of scientific and social-scientific disciplines no longer reliably produce true results—a state of affairs commonly referred to as the irreproducibility crisis (reproducibility crisis, replication crisis). A substantial majority of 1,500 active scientists recently surveyed by Nature called the current situation a crisis; 52% judged the situation a major crisis and another 38% judged it “only” a minor crisis.21 The increasingly degraded ordinary procedures of modern science display the symptoms of catastrophic failure.22

The scientific world’s dysfunctional professional incentives bear much of the blame for this catastrophic failure.

The Scientific World’s Professional Incentives

Scientists generally think of themselves as pure truth-seekers who seek to follow a scientific ethos roughly corresponding to Merton’s norms of universalism, communality, disinterestedness, and organized skepticism.23 Public trust in scientists24 generally derives from a belief that they adhere successfully to those norms. But this self-conception differs markedly from reality.

Knowingly or unknowingly, scientists respond to economic and reputational incentives as they pursue their own self-interest.25 Buchanan and Tullock’s work on public choice theory provides a good general framework. Politicians and civil servants (bureaucrats) act to maximize their self-interest rather than acting as disinterested servants of the public good.26 This general insight applies specifically to scientists, peer reviewers, and government experts.27 The different participants in the scientific research system all serve their own interests as they follow the system’s incentives.

Well-published university researchers earn tenure, promotion, lateral moves to more prestigious universities, salary increases, grants, professional reputation, and public esteem—above all, from publishing exciting, new, positive results. The same incentives affect journal editors, who receive acclaim for their journal, and personal reputational awards, by publishing exciting new research—even if the research has not been vetted thoroughly.28 Grantors want to fund the same sort of exciting research—and government funders possess the added incentive that exciting research with positive results also supports the expansion of their organizational mission.29 American university administrations want to host grant-winning research, from which they profit by receiving “overhead” costs—frequently a majority of overall research grant costs.30

All these incentives reward published research with new positive claims—but not reproducible research. Researchers, editors, grantors, bureaucrats, university administrations—each has an incentive to seek out the exciting new research that draws money, status, and power, but few or no incentives to double check their work. Above all, they have littleincentive to reproduce the research, to check that the exciting claim holds up—because if it does not, they will lose money, status, and prestige.

Each member of the scientific research system, seeking to serve his or her own interest, engages in procedures guaranteed to inflate the production of exciting, but false research claims in peer-reviewed publications. Collectively, the scientific world’s professional incentives do not sufficiently reward reproducible research. We can measure the overall effect of the scientific world’s professional incentives by analyzing publication bias.

Academic Incentives versus Industrial Incentives

Far too many academics and bureaucrats, and a distressingly large amount of the public, believe that university science is superior to industrial science. University science is believed to be disinterested; industrial science corrupted by the desire to make a profit. University science is believed to be accurate and reliable; industrial science is not.31

Our critique of the scientific world’s professional incentives is, above all, a critique of university science incentives. According to one study, zero out of fifty-two epidemiological claims in randomized trials could be replicated.32 According to another, only 36 of 100 of the most important psychology studies could be replicated.33 Nutritional research, a tissue of disproven claims such as coffee causes pancreatic cancer, has lost much of its public credibility.34 Academic science, both observational and experimental, possesses astonishingly high error rates—and peer and editorial review of university research no longer provides effective quality control.35

Industry research is subject to far more effective quality control. Government-imposed Good Laboratory Practice Standards, and their equivalents, apply to a broad range of industry research—and do not apply to university research.36 Industry, moreover, is subject to the most effective quality control of all—a company’s products must work, or it will go out of business.37 Both the profit incentive and government regulation tend to make industrial science reliable; neither operates upon academic science.

As we will see below, environmental epidemiology regulation is largely based on university research. We should treat it with the same skepticism as we would industry research.

Publication Bias: How Published Research Skews Toward False Positive Results

The scientific world’s incentives for exciting research rather than reproducible research drastically affects which research scientists submit for publication. Scientists who try to build their careers on checking old findings or publishing negative results are unlikely to achieve professional success. The result is that scientists simply do not submit negative results for publication. Some negative results go to the file drawer. Others somehow turn into positive results as researchers, consciously or unconsciously, massage their data and their analyses. Neither do they perform or publish many replication studies, since the scientific world’s incentives do not reward those activities either.38

We can measure this effect by anecdote. One co-author recently attended a conference where a young scientist stood up and said she spent six months trying unsuccessfully to replicate a literature claim. Her mentor said to move on—and that failed replication never entered the scientific literature. Individual papers also recount problems, such as difficulties encountered when correcting errors in peer-reviewed literature.39 We can quantify this skew by measuring publication bias—the skew in published research toward positive results compared with results present in the unpublished literature.40

A body of scientific literature ought to have a large number of negative results, or results with mixed and inconclusive results. When we examine a given body of literature and find an overwhelmingly large number of positive results, especially when we check it against the unpublished literature and find a larger number of negative results, we have evidence that the discipline’s professional literature is skewed to magnify positive effects, or even create them out of whole cloth.41

As far back as 1987, a study of the medical literature on clinical trials showed a publication bias toward positive results: “Of the 178 completed unpublished randomized controlled trials (RCTs)42 with a trend specified, 26 (14%) favored the new therapy compared to 423 of 767 (55%) published reports.”43 Later studies provide further evidence that the phenomenon affects an extraordinarily wide range of fields, including:

- the social sciences generally, where “strong results are 40 percentage points more likely to be published than are null results and 60 percentage points more likely to be written up;”44

- climate science, where “a survey of Science and Nature demonstrates that the likelihood that recent literature is not biased in a positive or negative direction is less than one in 5.2 × 10^-16;”45

- psychology, where “the negative correlation between effect size and samples size, and the biased distribution of p values indicate pervasive publication bias in the entire field of psychology;”46

- sociology, where “the hypothesis of no publication bias can be rejected at approximately the 1 in 10 million level;”47

- research on drug education, where “publication bias was identified in relation to a series of drug education reviews which have been very influential on subsequent research, policy and practice;”48 and

- research on “mindfulness-based mental health interventions,” where “108 (87%) of 124 published trials reported ≥1 positive outcome in the abstract, and 109 (88%) concluded that mindfulness-based therapy was effective, 1.6 times greater than the expected number of positive trials based on effect size.”49

Most relevantly for this report on PM2.5 regulation, publication bias has contributed heavily to the ratio of false positives to false negatives in published environmental epidemiology literature; this ratio is probably at least 20 to 1.50

What publication bias especially leads to is a skew in favor of research that erroneously claims to have discovered a statistically significant relationship in its data.

What is Statistical Significance?

The requirement that a research result be statistically significant has long been a convention of epidemiologic research.51 In hundreds of journals, in a wide variety of disciplines, you are much more likely to get published if you claim to have a statistically significant result. To understand the nature of the irreproducibility crisis, we must examine the nature of statistical significance. Researchers try to determine whether the relationships they study differ from what can be explained by chance alone by gathering data and applying hypothesis tests, also called tests of statistical significance. In practice, the hypothesis that forms the basis of a test of statistical significance is rarely the researcher’s original hypothesis that a relationship between two variables exists. Instead, scientists almost always test the hypothesis that no relationship exists between the relevant variables. Statisticians call this the null hypothesis. As a basis for statistical tests, the null hypothesis is mathematically precise in a way that the original hypothesis typically is not. A test of statistical significance yields a mathematical estimate of how well the data collected by the researcher supports the null hypothesis. This estimate is called a p-value.

It is traditional in environmental epidemiology to use confidence intervals instead of p-values from a hypothesis test to demonstrate statistical significance. As both confidence intervals and p-values are constructed from the same data, they are interchangeable, and one can be estimated from the other.52 Our use of p-values in this report implies they can be (and are) estimated from the confidence intervals used in environmental epidemiology studies.

The Bell Curve and the P-Value: The Mathematical Background

All “classical” statistical methods rely on the Central Limit Theorem, proved by Pierre-Simon Laplace in 1810.

The theorem states that if a series of random trials are conducted, and if the results of the trials are independent and identically distributed, the resulting normalized distribution of actual results, when compared to the average, will approach an idealized bell-shaped curve as the number of trials increases without limit.

By the early twentieth century, as the industrial landscape came to be dominated by methods of mass production, the theorem found application in methods of industrial quality control. Specifically, the p-test naturally arose in connection with the question “how likely is it that a manufactured part will depart so much from specifications that it won’t fit well enough to be used in the final assemblage of parts?” The p-test, and similar statistics, became standard components of industrial quality control.

It is noteworthy that during the first century or so after the Central Limit Theorem had been proved by Laplace, its application was restricted to actual physical measurements of inanimate objects. While philosophical grounds for questioning the assumption of independent and identically distributed errors existed (i.e., we can never know for certain that two random variables are identically distributed), the assumption seemed plausible enough when discussing measurements of length, or temperatures, or barometric pressures.

Later in the twentieth century, to make their fields of inquiry appear more “scientific”, the Central Limit Theorem began to be applied to human data, even though nobody can possibly believe that any two human beings—the things now being measured—are truly independent and identical. The entire statistical basis of “observational social science” rests on shaky supports, because it assumes the truth of a theorem that cannot be proved applicable to the observations that social scientists make.

A p-value estimated from a confidence interval is a number between zero and one, representing a probability based on the assumption that the null hypothesis is actually true.53 A very low p-value means that, if the null hypothesis is true, the researcher’s data are rather extreme—surprising, because a researcher’s formal thesis when conducting a null hypothesis test is that there is no association or difference between two groups. It should be rare for data to be so incompatible with the null hypothesis. But perhaps the null hypothesis is not true, in which case the researcher’s data would not be so surprising. If nothing is wrong with the researcher’s procedures for data collection and analysis, then the smaller the p-value, the less likely it is that the null hypothesis is correct.

In other words: the smaller the p-value, the more reasonable it is to reject the null hypothesis and conclude that the relationship originally hypothesized by the researcher does exist between the variables in question. Conversely, the higher the p-value, and the more typical the researcher’s data would be in a world where the null hypothesis is true, the less reasonable it is to reject the null hypothesis. Thus, the p-value provides a rough measure of the validity of the null hypothesis—and, by extension, of the researcher’s “real hypothesis” as well.54 Or it would, if a statistically significant p-value had not become the gold standard for scientific publication.55

Why Does Statistical Significance Matter?

The government’s central role in science, both in funding scientific research and in using scientific research to justify regulation, further disseminated statistical significance throughout the academic world. Within a generation, statistical significance went from a useful shorthand that agricultural and industrial researchers used to judge whether to continue their current line of work, or switch to something new, to a prerequisite for regulation, government grants, tenure, and every other form of scientific prestige—and also, and crucially, the essential prerequisite for professional publication.

Scientists’ incentive to produce positive, original results became an incentive to produce statistically significant results. Groupthink, frequently enforced via peer review and editorial selection, inhibits publication of results that run counter to disciplinary or political presuppositions.56 Many more scientists use a variety of statistical practices, with more or less culpable carelessness, including:

- improper statistical methodology;

- consciously or unconsciously biased data manipulation that produces desired outcomes;

- choosing between multiple measures of a variable, selecting those that provide statistically significant results, and ignoring those that do not; and

- using illegitimate manipulations of research techniques.57

Still others run statistical analyses until they find a statistically significant result—and publish the one (likely spurious) result. Far too many researchers report their methods unclearly, and let the uninformed reader assume they actually followed a rigorous scientific procedure.58 A remarkably large number of researchers admit informally to one or more of these practices—which collectively are informally called p-hacking.59 Significant evidence suggests that p-hacking is pervasive in an extraordinary number of scientific disciplines.60 HARKing is the most insidious form of p-hacking.

HARKing: Exploratory Research Disguised as Confirmatory Research

To HARK is to hypothesize after the results are known—to look at the data first and then come up with a hypothesis that provides a statistically significant result.61 Irreproducible research hypotheses produced by HARKing send whole disciplines chasing down rabbit holes, as scientists interpret their follow-up research to conform to a highly tentative piece of exploratory research that was pretending to be confirmatory research.

Scientific advance depends upon scientists maintaining a distinction between exploratory research and confirmatory research, precisely to avoid this mental trap. These two types of research should utilize entirely different procedures. HARKing conflates the two by pretending that a piece of exploratory research has really followed the procedures of confirmatory research.62

Jaeger and Halliday provide a useful brief definition of exploratory and confirmatory research, and how they differ from one another:

Explicit hypotheses tested with confirmatory research usually do not spring from an intellectual void but instead are often gained through exploratory research. Thus exploratory approaches to research can be used to generate hypotheses that later can be tested with confirmatory approaches. ... The end goal of exploratory research ... is to gain new insights, from which new hypotheses might be developed. ... Confirmatory research proceeds from a series of alternative, a priori hypotheses concerning some topic of interest, followed by the development of a research design (often experimental) to test those hypotheses, the gathering of data, analyses of the data, and ending with the researcher’s inductive inferences. Because most research programs must rely on inductive (rather than deductive) logic..., none of the alternative hypotheses can be proven to be true; the hypotheses can only be refuted or not refuted. Failing to refute one or more of the alternative hypotheses leads the researcher, then, to gain some measure of confidence in the validity of those hypotheses.63

Exploratory research, in other words, has few predefined hypotheses. Researchers do not at first know what precisely they’re looking for, or even necessarily where to look for it. They “typically generate hypotheses post hoc rather than test a predefined hypothesis.”64 Exploratory studies can easily raise thousands of separate scientific claims65 and they possess an increased risk of finding false positive associations.

Confirmatory research “tests predefined hypotheses usually derived from a theory or the results of previous studies that can be used to draw firm and often meaningful conclusions.”66 Confirmatory studies ideally should focus on just one hypothesis, to provide a severe test of its validity. In good confirmatory research, researchers control every significant variable.

When multiple questions are at issue, researchers should use procedures such as Multiple Testing and Multiple Modeling (MTMM) to control for experiment-wise error—the probability that at least one individual claim will register a false positive when you conduct multiple statistical tests.67 (For further information about MTMM, see Appendix 1: Multiple Testing and Multiple Modeling (MTMM) and Epidemiology.)

Researchers should state the hypothesis clearly, draft the research protocol carefully, and leave as little room for error as possible in execution or interpretation. Properly conducted, confirmatory research is by its nature far less likely to find false positive associations than original research, and conclusions supported by confirmatory research are correspondingly more reliable.

Researchers resort to HARKing—exploratory research that mimics confirmatory research—not only because it can increase their publication rate but also because it can increase their prestige. HARKing scientists can gain the reputation for an overwhelmingly probable research result when all they have really done is set the stage for more follow-on false positive results or file-drawer negative results.

Another way to define HARKing is that, like p-hacking more generally, it overfits data—it produces a model that conforms to random data.68 Consider, for example, The Life Project, a generations-long British cohort study about human development that provided data for innumerable professional articles in a range of social science and health disciplines, including 2,500 papers drawing solely on data about the cohort born in 1958.69 These 2,500 articles have influenced a wide variety of public policy initiatives, by asserting that X cause is associated with Y effect—for example, that babies born on weekdays thrive better than babies born on weekends.70

But The Life Project never stated any research hypothesis in advance—it simply asked large numbers of questions and searched for possible associations. This is bound to produce false positives—statistical associations produced by pure chance. It is the essence of HARKing: exploratory research masquerading as confirmatory research. The sheer number of associations examined by The Life Project indicates that any claim of an association between a cause and an effect—e.g., weekday babies thrive, weekend babies don’t—should be considered to have no statistical support unless the p-value for an association has been evaluated using Multiple Testing and Multiple Modeling procedures.

Food frequency questionnaires (FFQ) generally suffer the same frailties that afflict cohort studies such as The Life Project. In a typical FFQ, researchers ask people to recall whether they have consumed various specified foods, and in what quantities. Researchers then ask whether they have experienced various specified health events at a much later date. These FFQs suffer from the frailties of human memory—but they also simply ask large numbers of questions and search for possible associations. For example, the 1985 Willett FFQ, notable in nutrition science literature, asked people questions about 61 different foods.71

Two more recent FFQs, typical of the field, respectively ask people about 264 food items and 900 food items.72 As with The Life Project, the sheer number of possible associations, none of which confirmed a prior research hypothesis, are the definition of exploratory research that should be considered to have no statistical support unless the p-value for an association has been evaluated using MTMM procedures.

HARKing, unfortunately, includes yet wider categories of research. When scientists preregister their research, they specify and publish their research plan in advance. All un-preregistered research can be susceptible to HARKing, as it allows researchers to transform their exploratory research into confirmatory research by looking at their data first and then constructing a hypothesis to fit the data, without informing peer reviewers that this is what they did.73 In general, researchers too frequently fail to make clear distinctions between exploratory and confirmatory research, or to signal transparently to their readers the nature of their own research.74

Consequences: Canonization of False Claims

Publication bias, p-hacking, and HARKing collectively have seriously degraded scientific research as a whole. Head, et al. noted that p-hacking pervades virtually every scientific discipline.75 Disciplines such as physics, astronomy, and genome-wide association studies (GWAS) appear to be exceptions to this generalization, but that is because they define significant p-values as several orders of magnitude smaller than 0.05.76 Elsewhere, the effects are stark.

As early as 1975, Greenwald noted that only 6 percent of researchers were inclined to publish a negative result, whereas 60 percent were inclined to publish a positive result—a ratio of ~10 to 1.77 Simonsohn, et al., note that replication does not necessarily support a claim if a field of research has been subject to data manipulation, or has failedto report negative results.78 Many researchers consider the essentially improper procedure of testing many questions using the same data set to be “business as usual”—even though research that does not control for the size of the analysis search space cannot be considered to have any statistical support.79

A false research claim can be canonized as the foundation for an entire body of literature that is uniformly false. Nissen undertook a theoretical analysis and noted that it is possible for a false claim to become an established “truth”—and that it is especially likely in disciplines where publication bias skews heavily in favor of positive studies and against negative studies.80 We may recollect here the 2015 Open Science Collaboration study that failed to replicate 64 of 100 examined “canonical” psychology studies.81 Nissen’s claim, based upon a mathematical model and simulations, appears to have received experimental substantiation in the discipline of psychology. One co-author’s examination of a body of environmental epidemiology literature also supports his thesis.82 It is all too likely to be true throughout the sciences and social sciences.

What Can Be Done?

Modern scientific research’s irreproducibility crisis arises above all from extraordinary amounts of publication bias, p-hacking, and HARKing. We cannot tell exactly which pieces of research have been affected by these errors until scientists replicate every single piece of published research. Yet we do possess sophisticated statistical strategies that will allow us to diagnose specific claims, fields, and literatures that inform government regulation, so that we may provide a severe, replicable test that allows us to quantify the combined effect of p-hacking, HARKing, and publication bias on a claim or a field as a whole.

One such method—an acid test for statistical skullduggery—is p-value plotting.

P-Value Plotting: A Severe Test for Publication Bias, P-hacking, and HARKing

Introduction

We use p-value plotting to test whether a field has been affected by the irreproducibility crisis—by publication bias, p-hacking, and HARKing. In essence, we analyze meta-analyses of research and output their results on a simple plot that displays the distribution of p-value results:

- A literature unaffected by publication bias, p-hacking or HARKing should display its results as a single line.

- A literature which has been affected by publication bias, p-hacking or HARKing should display bilinearity—divides into two separated lines.

P-value plotting of meta-analyses results allows a reader, at a glance, to determine whether there is circumstantial evidence that a body of scientific literature has been affected by the irreproducibility crisis.

We will summarize here the statistical components of p-value plotting. We will begin by outlining a few basic elements of statistical methodology: counting; the definition and nature of p-values; and a simple p-value plotting method, which makes it relatively simple to evaluate a collection of p-values. We will then explain what meta-analyses are, and how they are used to inform government regulation. We will then explain how precisely p-value plotting of meta-analyses works, and what it reveals about the scientific literature it tests.

Counting

Counting can be used to identify which research papers in literature may suffer from the various biases described above. We should want to know how many “questions” are under consideration in a research paper. In a typical environmental epidemiology paper, for example, there are usually several health outcomes at issue, such as deaths from all causes, heart attacks, other cardiovascular deaths, and pulmonary deaths. Researchers consider whether a risk factor, such as the concentration of particular air components, predicts any of these health outcomes—that is to say, whether the concentration of an air component may be “positively” associated with a particular health outcomes.83

When they study air pollution, environmental epidemiologists may analyze six air components: carbon monoxide (CO), nitrogen dioxide (NO2), ozone (O3), sulfur dioxide (SO2), and two sizes of particulate matter—particulate matter 10 micrometers or less in diameter (PM10) and particulate matter 2.5 micrometers or less in diameter (PM2.5). Each component is a predictor, each type of health effect is an outcome. Scientists may further analyze an association between a particular air component and a particular health outcome with reference to categories of analysis such as weather, age, and sex. Researchers call these further categories of analysis covariates; covariates may affect the strength of the association, but they are not the direct objects of study.

An epidemiology paper considers a number of questions equal to the product of the number of outcomes (O) times the number of predictors (P) times 2 to the power of the number of covariates (C). In other words:

the number of questions = O x P x 2^C

This formula approximates the number of statistical tests an epidemiology study performs. The larger the number of statistical tests, the easier it is to find a statistically significant association due solely to chance.

P-values

As we have summarized above, a null hypothesis significance test is a method of statistical inference in which a researcher tests a factor (or predictor) against a hypothesis of no association with an outcome. The researcher uses an appropriate statistical test to attempt to disprove the null hypothesis. The researcher then converts the result to a p-value. The p-value is a value between 0 and 1 and it is a numerical measure of significance. The smaller the p-value, the more significant the result. Significance is the technical term for surprise. When we are conducting a null hypothesis significance test, we should expect no relationship between any particular predictor and any particular outcome. Any association, any departure from the null hypothesis (random chance), should and does surprise us.

If the p-value is small—conventionally in many disciplines, less than 0.05—then the researcher may reject the null hypothesis and conclude the result is surprising and that there is indeed evidence for a significant relationship between a predictor and an outcome. If the p-value is large—conventionally, greater than 0.05—then the researcher should accept the null hypothesis and conclude there is nothing surprising and that there is no evidence for a significant relationship between a predictor and an outcome.

But strong evidence is not dispositive (absolute) evidence. By definition, where p = 0.05, a null hypothesis that is true will be rejected, by chance, 5% of the time. When this happens, it is called a false positive—false positive evidence for the research hypothesis (false evidence against the null hypothesis). The size of the experiment does not matter. When researchers compute a single p-value, both large and small studies have a 5% chance of producing a false positive result.

Such studies, by definition, can also produce false negatives—false negative evidence against the research hypothesis (false evidence for the null hypothesis). In a world of pure science, false positives and false negatives would have equally negative effects on published research. But all the incentives in our summary of the Irreproducibility Crisis indicate that scientists vastly overproduce false positive results. We will focus here, therefore, on false positives—which far outnumber false negatives in the published scientific literature.84

We will focus particularly on how and why conducting a large number of statistical tests produces many false positives by chance alone.

Simulating Random p-values

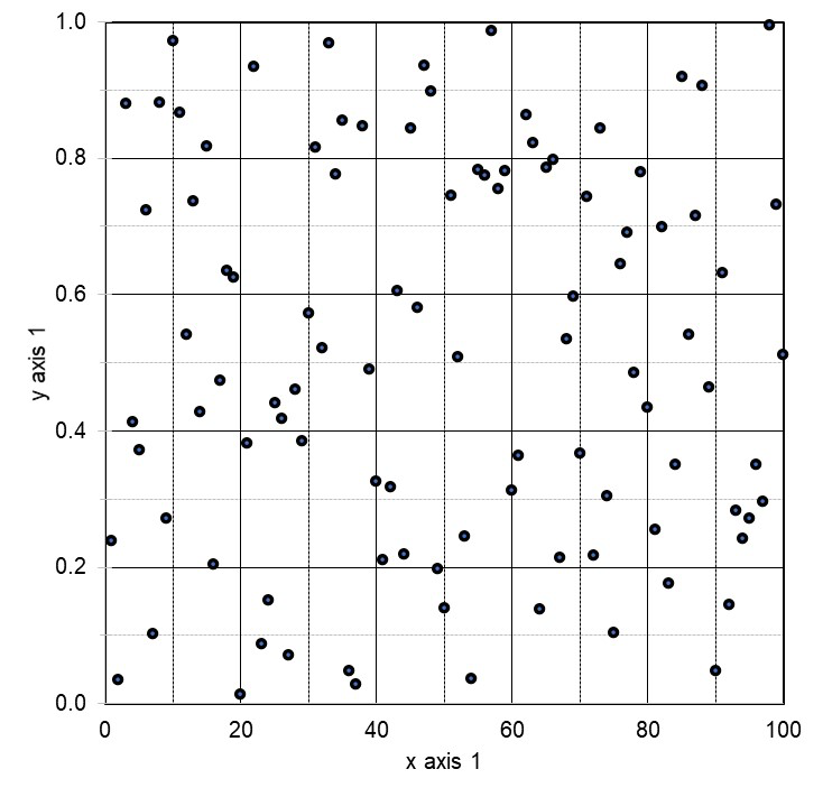

We can illustrate how a large number of statistical tests produce false positives by chance alone by means of a simulated experiment. We can use a computer to generate 100 pseudo-random numbers between 0 and 1 that mimic p-values and enter them into a 5 x 20 table. (See Figure 1.) These randomly generated p-values should be evenly distributed, with approximately 5 results between 0 and 0.05, 5 between 0.05 and 0.10, and so on—approximately, because a randomly generated sequence of numbers should not produce a perfectly uniform distribution.

In Figure 1, we have simulated an environmental epidemiology study analyzing associations between air components and tumors. Remember, these numbers were picked at random.

Figure 1: 100 Simulated p-values

|

Tumor |

1 |

2 |

3 |

4 |

5 |

|

T01 |

0.899 |

0.417 |

0.673 |

0.754 |

0.686 |

|

T02 |

0.299 |

0.349 |

0.944 |

0.405 |

0.878 |

|

T03 |

0.868 |

0.535 |

0.448 |

0.430 |

0.221 |

|

T04 |

0.439 |

0.897 |

0.930 |

0.500 |

0.257 |

|

T05 |

0.429 |

0.082 |

0.038 |

0.478 |

0.053 |

|

T06 |

0.432 |

0.305 |

0.056 |

0.403 |

0.821 |

|

T07 |

0.982 |

0.707 |

0.460 |

0.789 |

0.956 |

|

T08 |

0.723 |

0.931 |

0.827 |

0.296 |

0.758 |

|

T09 |

0.174 |

0.982 |

0.277 |

0.970 |

0.366 |

|

T10 |

0.117 |

0.339 |

0.281 |

0.746 |

0.419 |

|

T11 |

0.433 |

0.640 |

0.313 |

0.310 |

0.482 |

|

T12 |

0.004 |

0.412 |

0.428 |

0.195 |

0.184 |

|

T13 |

0.663 |

0.552 |

0.893 |

0.084 |

0.827 |

|

T14 |

0.785 |

0.398 |

0.895 |

0.393 |

0.092 |

|

T15 |

0.595 |

0.322 |

0.159 |

0.407 |

0.663 |

|

T16 |

0.553 |

0.173 |

0.452 |

0.859 |

0.899 |

|

T17 |

0.748 |

0.480 |

0.486 |

0.018 |

0.130 |

|

T18 |

0.643 |

0.371 |

0.303 |

0.614 |

0.149 |

|

T19 |

0.878 |

0.548 |

0.039 |

0.864 |

0.152 |

|

T20 |

0.559 |

0.343 |

0.187 |

0.109 |

0.930 |

Each box in Figure 1 represents a different statistical test applied to associate a predictor (an air component) with an outcome (a health consequence). The Figure displays results of null hypothesis tests analyzing whether the annual incidence of 20 different tumors observed during a given year for 5 different air components are greater than an expected annual incidence rate of each tumor. Each box represents one out of 100 null hypothesis statistical tests—1 test for each of 20 tumors, applied to 5 different air components. The number in the box represents the p-value of each individual statistical test.

This simulation contains four p-values that are less than 0.05: 0.004, 0.038, 0.038 and 0.018. In other words, by sheer chance alone, a researcher could write and publish four professional articles based on the four “significant” results (p-values less than 0.05). Researchers are supposed to take account of these pitfalls (chance outcomes). There are standard procedures that can be used to prevent researchers from simply cherry-picking “significant” results.85 But it is all too easy for a researcher to set aside those standard procedures, to p-hack, and just report on and write a paper for each result with a nominally significant p-value.

P-hacking by Asking Multiple Questions

As noted above, a standard form of p-hacking is for a researcher to run statistical analyses until a statistically significant result appears—and publish the one (likely spurious) result. When researchers ask hundreds of questions, when they are free to use any number of statistical models to analyze associations, it is all too easy to engage in this form of p-hacking. In general, research based on multiple analyses of large complex data sets is especially susceptible to p-hacking, since a researcher can easily produce a p-value < 0.05 by chance alone.86 Research that relies on combining large numbers of questions and computing multiple models is known as Multiple Testing and Multiple Modeling.87 (See Appendix 1: Multiple Testing and Multiple Modeling (MTMM) and Epidemiology.)

Confirmation bias compounds the difficulties of observing a chance p-value < 0.05. Confirmation bias, frequently triggered by HARKing that falsely conflates exploratory research with confirmatory research, influences researchers so that they are more likely to publish research that confirms a dominant scientific paradigm, such as the association of an air component with a health outcome, and less likely to publish results that contradict a dominant scientific paradigm.

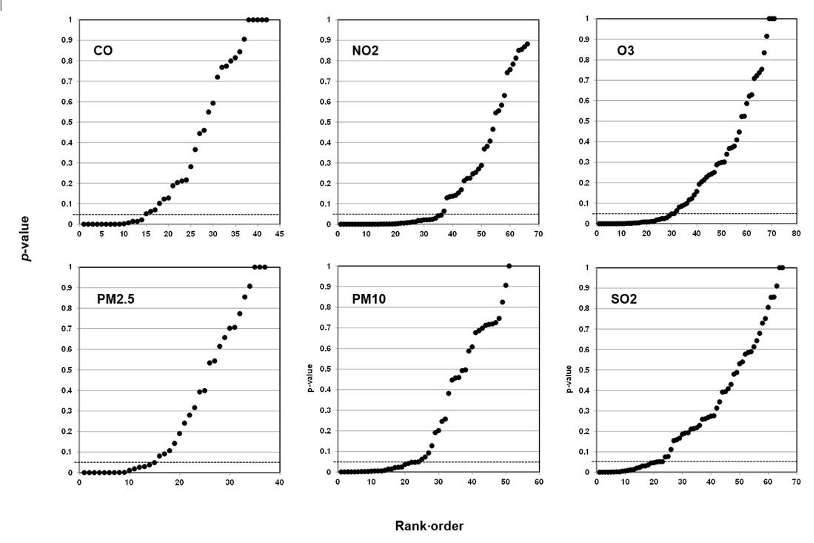

The following example, drawn from our earlier research into the relationship of air components to health effects, illustrates how we should incorporate the role of analysis search space (counting) into this discussion. In Figure 2 we examine the estimated size of analysis search space for eight papers that appeared in a major environmental epidemiology journal.88 Figure 2 gives the number of questions, models and search spaces for these papers listed by first author.

Figure 2: Estimated Size of Analysis Search Space, Eight Environmental Epidemiology Papers

|

RowID |

Author |

Year |

Questions |

Models |

Search Space |

|

1 |

Zanobetti |

2005 |

3 |

128 |

384 |

|

2 |

Zanobetti |

2009 |

150 |

16 |

2,400 |

|

3 |

Ye |

2001 |

560 |

8 |

4,480 |

|

4 |

Koken |

2003 |

150 |

32 |

4,800 |

|

5 |

Barnett |

2006 |

56 |

256 |

14,336 |

|

6 |

Linn |

2000 |

120 |

128 |

15,360 |

|

7 |

Mann |

2003 |

96 |

512 |

49,152 |

|

8 |

Rich |

2010 |

175 |

1,024 |

179,200 |

In Figure 2 above:

- Questions = Outcomes x Predictors;

- Models = 2^Covariates, as a model can include a covariate, but need not; and

- Search Space = Questions x Models.

Any researcher whose study contains a large search space could undertake, but not report, a wide range of statistical tests. The researcher also could use, but not report, different statistical models, before selecting, using, and reporting the results. Figure 2 demonstrates just how large a search space is available for researchers to find and report results with a p-value less than 0.05.

These papers are typical of environmental epidemiology studies. As will be shown later, the median search space across 70 environmental epidemiology papers that we have recently examined is more than 13,000. A typical environmental epidemiology study is expected to have by chance alone approximately 13,000 x 0.05 = 650 “statistically significant” results.

P-value Plots

Now we put together several concepts that we have introduced. When we conduct a null hypothesis statistical test, we can produce a single p-value that can fall anywhere in the interval from 0 to 1, and which is considered “statistically significant” in many disciplines when it is less than 0.05. We also know that researchers often look at many questions and compute many models using the same observational data set, and that this allows them to claim that a small p-value produced by chance substantiates a claim to a significant association.

Consider the following example.89 Researchers made a claim that by eating breakfast cereal a woman is more likely to have a boy baby.90 The researchers conducted a food frequency questionnaire (FFQ) that asked pregnant women about their consumption of 131 foods at two different time points, one before conception and one just after the estimated date of conception. The FFQ posed a total of 262 questions. The researchers obtained a result with a p-value less than 0.05 and claimed they had discovered an association between maternal breakfast cereal consumption and fetal sex ratios. Their procedure made it highly likely that they had simply discovered a false positive association.

We cannot prove that any one such result is a false positive, absent a series of replication experiments. But we can detect when a given result is likely to be a false positive, drawn from a larger body of questions that indicate randomness rather than a true positive association.

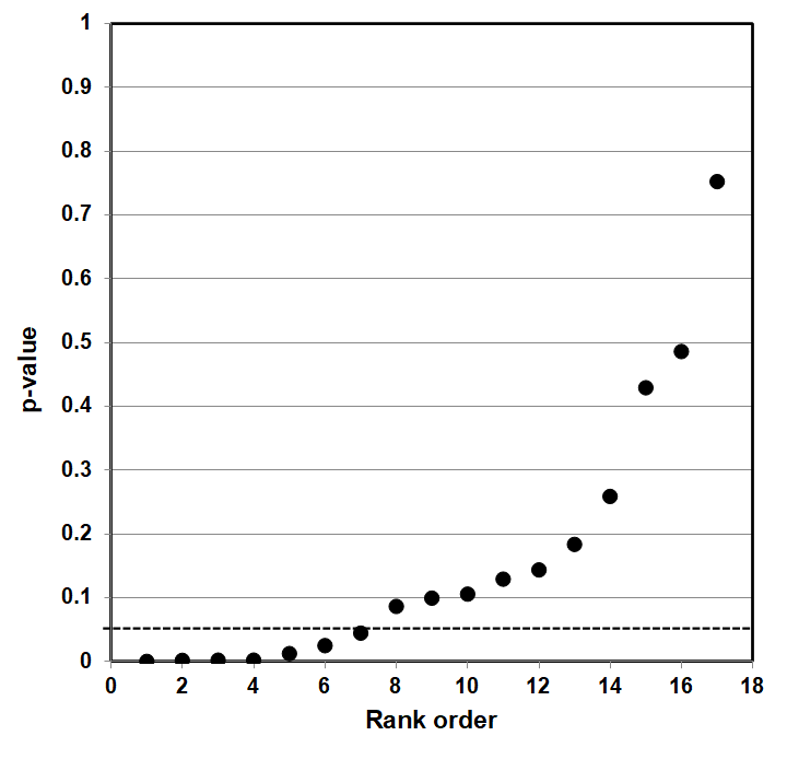

The way to assess a given result is to make a p-value plot of the larger body of results that includes the individual result, and then plot the reported p-values of each of those results. We then use this p-value plot to examine how uniformly the p-values are spread over the interval 0 to 1. We use the following steps to create the p-value plot.

- Rank-order the p-values from smallest to largest.

- Plot the p-values against the integers: 1, 2, 3, …

When we have created the p-value plot, we interpret it like this:

- A p-value plot that forms approximately a 45-degree line (i.e., slope = 1) provides evidence of randomness—a literature that supports the null hypothesis of no significant association.

- A p-value plot that forms approximately a line with slope < 1, where most of the p-values are small (less than 0.05), provides evidence for a real effect—a literature that supports a statistically significant association.

- A p-value plot that exhibits bilinearity—that divides into two lines—provides evidence of publication bias, p-hacking, and/or HARKing.91

Bilinear behavior (bilinearity) in a rank-ordered p-value plot suggests two linear relationships with a clear breakpoint to represent the data of interest. These relationships include a mixture of:

- a set of p-values—which are all less than 0.05—displaying a linear slope much less than 45⁰, and

- a set of p-values—which may range from near 0 to 1—displaying a slope approximately 45⁰.92

Why does a plotted 45-degree line of p-value results provide evidence of randomness? When a researcher conducts a series of statistical tests to test a hypothesis, and there is no significant association, the individual results ought to appear anywhere in the interval 0 to 1. When we rank these p-values and plot them against the integers 1, 2, … , they will produce a 45-degree line that depicts a uniform distribution of results. The differences between the individual results, in other words, differ from one another regularly, and produce collectively a uniform distribution of results.

Whenever we plot a body of linked p-value results, and the results plot to a 45-degree line, that is evidence that an individual result is the result of a random distribution of results—that even a putatively significant association is really only a fluke result, a false positive, where the evidence as a whole supports the null hypothesis of no significant association.

We may take this as evidence of randomness whether we apply it to:

- a series of individual studies focused on one question,

- a series of tests that emerge by uncontrolled testing of a set of different predictors and different outcomes, or

- a series of meta-analyses.93

The null hypothesis assumption is that there is no significant association. This presumption of a random outcome, of no significant association, must be positively defeated in a hypothesis test in order to make a claim of a significant, surprising result.94 The corollary is that an individual result of a significant association can only be taken as reliable if any body of results to which it belongs also positively defeats the p-value plot of a 45-degree line that depicts a uniform distribution of results.95 (For further details for constructing a p-value plot, see Appendix 2: Constructing P-value Plots.)

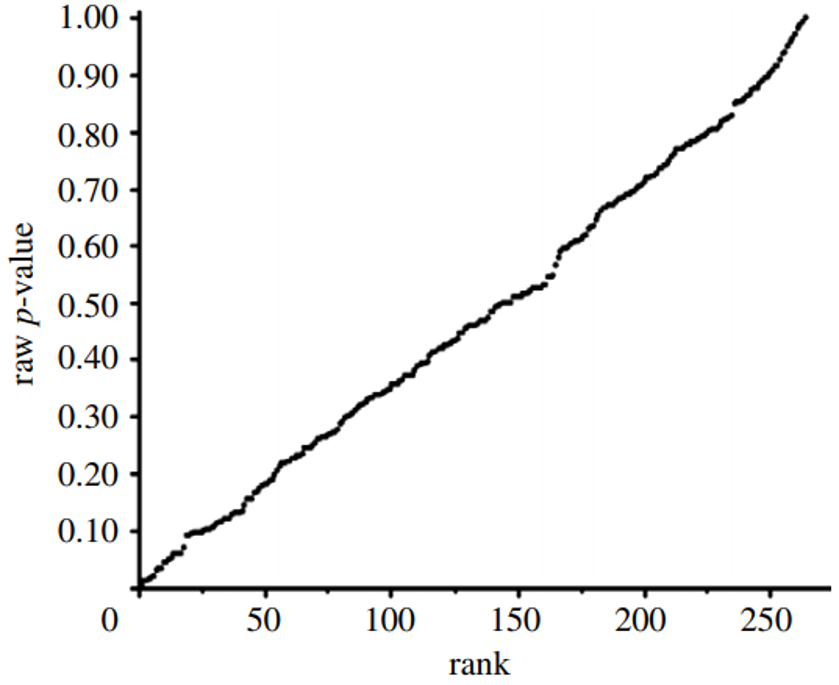

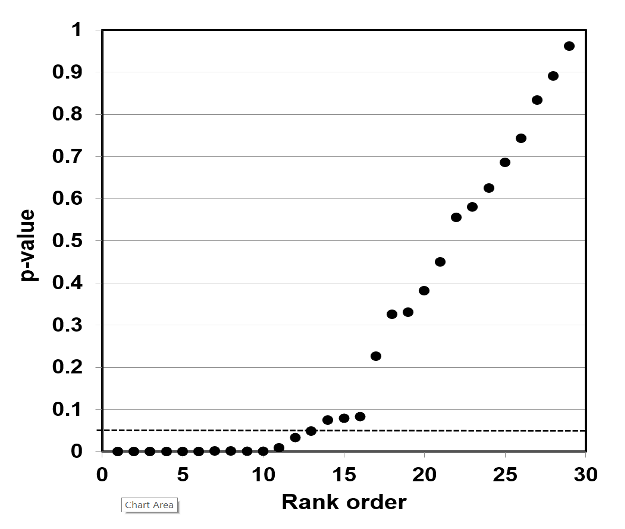

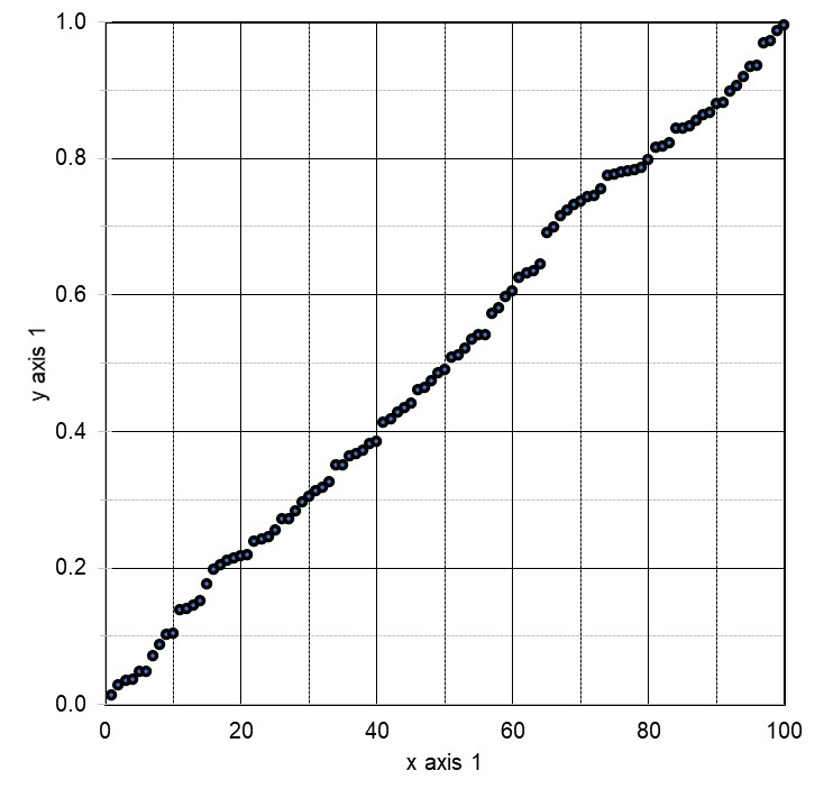

Let us return to the research linking breakfast cereal with increased conception of baby boys. That statistical association was drawn from 262 total questions, each of which produced its own p-value. When we plot the reported p-values of all 262 of those questions, in Figure 3 below, the result is a line of slope 1 (approximately).

Figure 3: P-value Plot, 262 P-values, Drawn from Food Frequency Questionnaire, Questions Concerning Boy Baby Conception96

This line supports the presumption of randomness as a 45-degree line starting at the origin 0,0 would fit the data very well. The small p-value, less than 0.05, registered for the association between breakfast cereal consumption and boy-baby conception, represents a false positive finding.

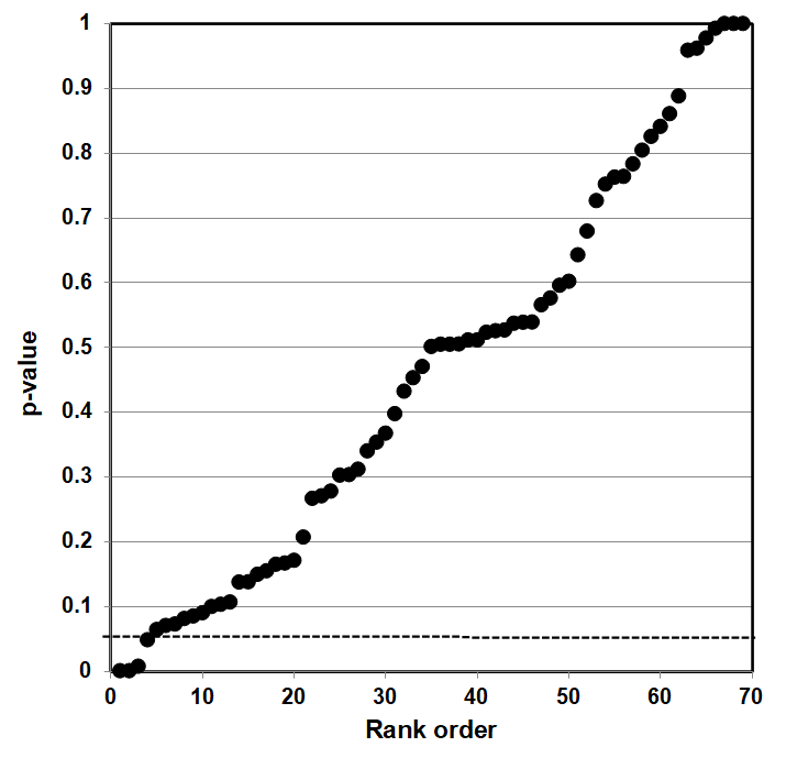

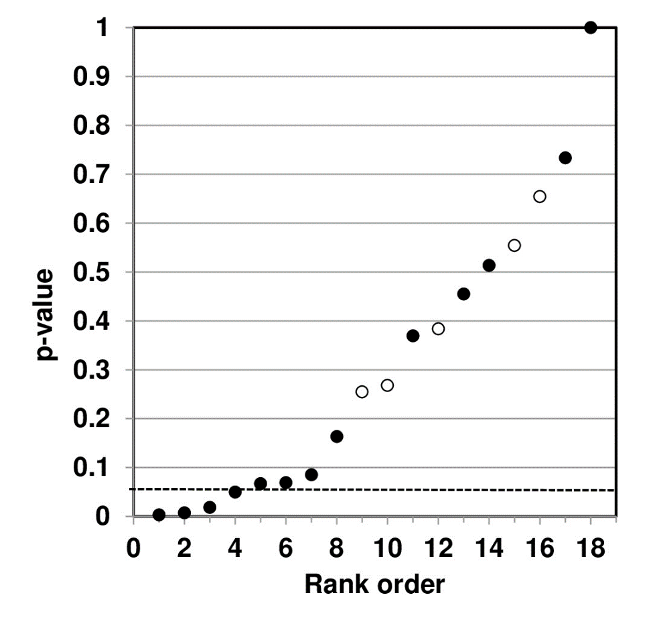

P-value plotting likewise reveals randomness, no significant association, when applied in Figure 4 to a meta-analysis that combined data from 69 questions drawn from 40 observational studies. The claim being evaluated in the meta-analysis was whether long-term exercise training of elderly is positively associated with greater mortality and morbidity (increased accidents and falls and hospitalization due to accidents and falls).

Figure 4: P-value Plot, 69 Questions Drawn From 40 Observational Studies, Meta-analysis of Observational Data Sets Analyzing Association Between Elderly Long-term Exercise Training and Mortality and Morbidity Risk97

Figure 4, as Figure 3, plots the p-values as a sloped line from left to right at approximately 45-degrees, and therefore supports the presumption of randomness. Note that Figure 4 contains four p-values less than 0.05, as well as several p-values close to 1.000. The p-values below p = 0.05 are most likely false positives.

These claims are purely statistical. Researchers can, and will, argue that discipline-specific information justifies treating their particular claim for a statistical association—that “relevant biological knowledge,” for example, supports the claim that there truly is an association between breakfast cereal consumption and boy-baby conception.98

We recognize the possibility where statisticians and disciplinary specialists talk past one another and refuse to engage with the substance of one another’s arguments. But we urge disciplinary specialists, and the public at large, to consider how extraordinarily unlikely it is for a p-value plot indicating randomness to itself be a false positive. The counter-argument that a particular result truly registers a significant association needs to refute the chances against such a 45 degree line appearing if the individual results were not the consequence of selecting false positives for publication.

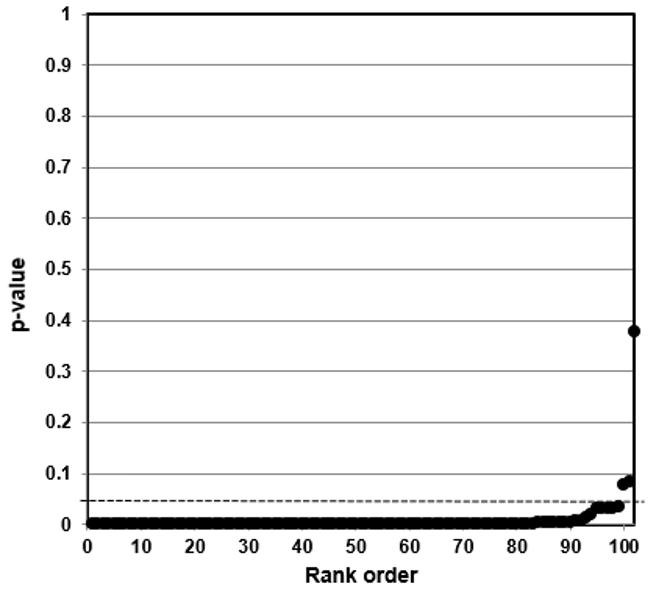

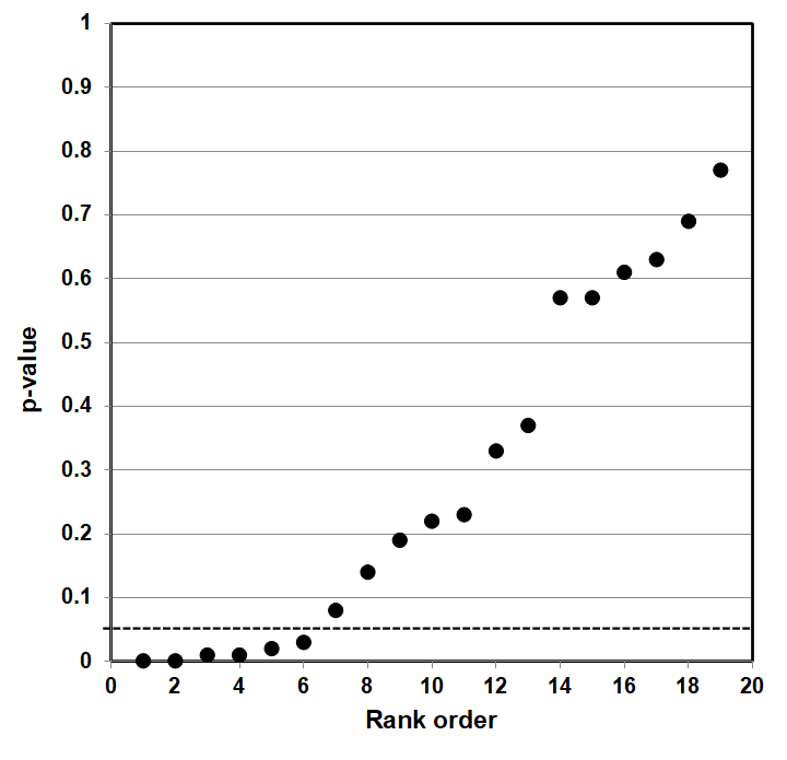

Such a counter-argument should also consider that p-value plotting does register true effects. We applied the same method to produce a p-value plot in Figure 5 of studies that examined a smoking-lung cancer association.

Figure 5: P-value Plot, 102 Studies, Association of Smoking and Squamous Cell Carcinoma of the Lungs99

In this case, the p-value plot did not form a roughly 45-degree line, with uniform p-value distribution over the interval. Instead it formed an almost horizontal line, with the vast majority of the results well below p = 0.01. Only 3 out of 102 p-values were above p = 0.05. One outlying p-value was just below 0.40—which reminds us that even where there is a true strong relationship, a few studies may produce false negatives. Our p-value plot provides evidence that the studies associating smoking and lung cancer had discovered a true association.

Bilinear P-value Plots

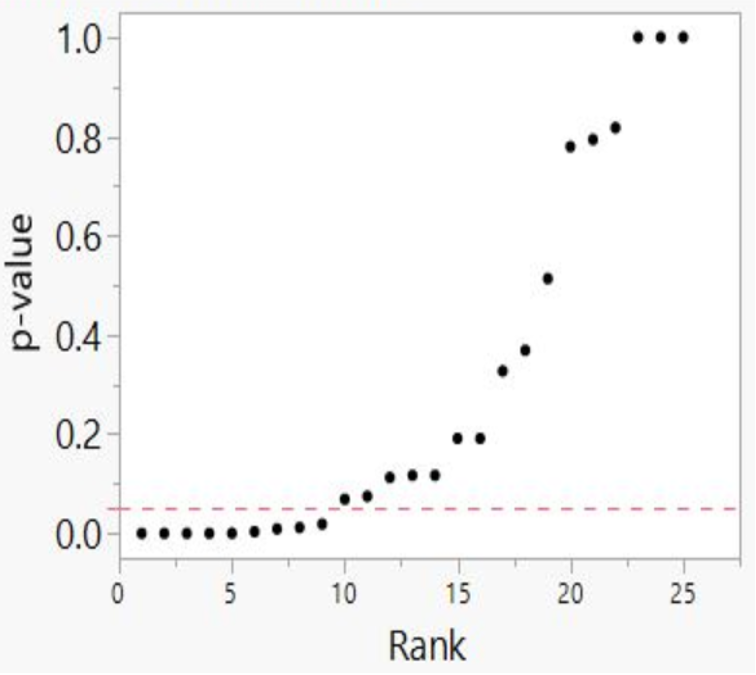

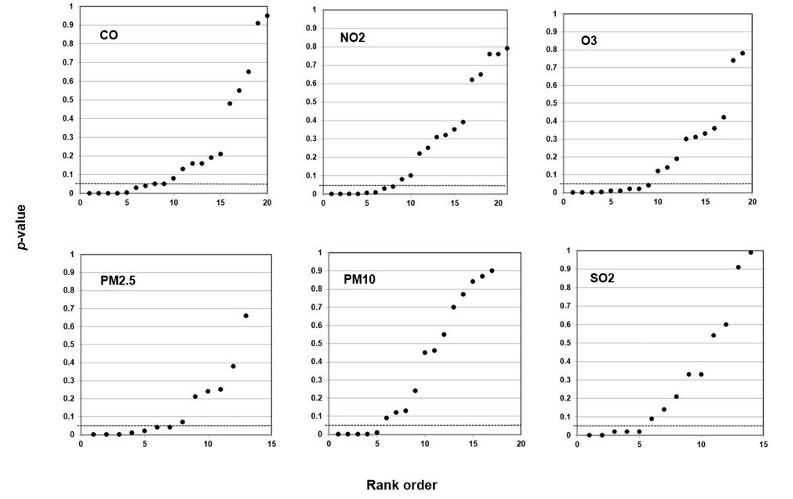

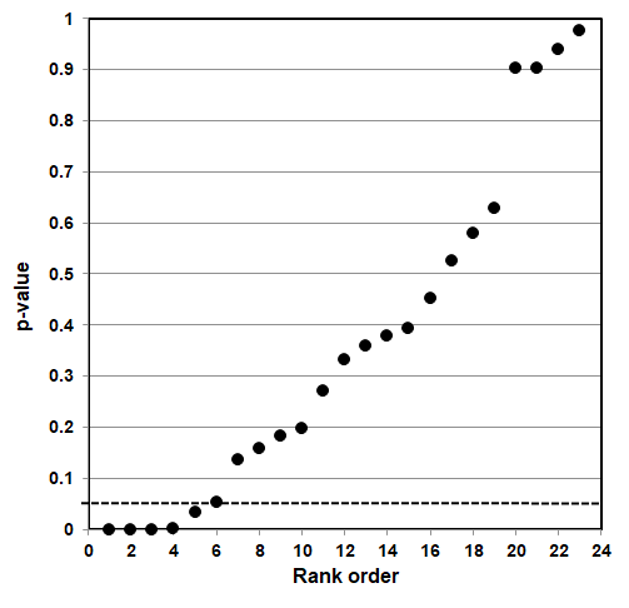

Our method also registers bilinear results (divides into two lines). In Figure 6, we plotted studies that analyze associations between fine particulate matter and the risk of preterm birth or term low birth weight. A 45-degree line as in Figures 3 and 4 indicates randomness, no effect, and therefore strongly suggests that researchers have indulged in HARKing if they claim a positive effect. A bilinear shape instead suggests the possibility of publication bias, p-hacking, and/or HARKing—although there remains some possibility of a true effect.

Figure 6: P-value Plot, 23 Studies, Association of Fine Particulate Matter (PM2.5) and the Risk of Preterm Birth or Term Low Birth Weight100

As we shall explain, such a bilinear plot should usually be interpreted as providing evidence that bias described above has affected a given field, albeit not as strong as the evidence that a 45-degree line provides evidence of no effect. Still, researchers would have good cause to query a claim of an association between fine particulate matter and the risk of preterm birth or term low birth weight, even if a true effect cannot be absolutely ruled out.

Figure 5 demonstrates that our method can detect true associations—it will not come back with a 45-degree line no matter what data you feed into it. When it does detect randomness, as in Figure 3 and 4, the inference is that a particular result is likely to be random, and that the claimed result has failed a statistical test that a true positive body of research passes.